The Learning Loop: How LLMs Evolve Through Data, Feedback, and Fine-Tuning

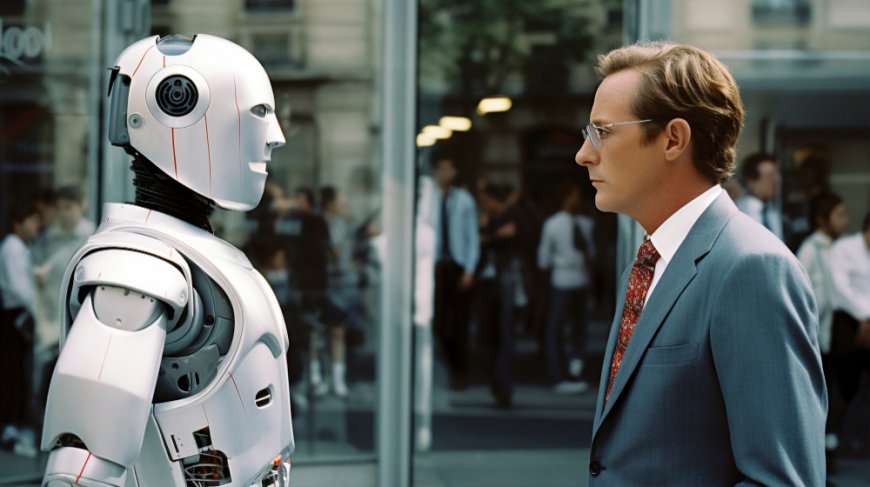

Large Language Models (LLMs) don’t just emerge fully formed; they evolve through iterative cycles of training, testing, and refinement.

The development of large language models has gone from curiosity to cornerstone. Whether answering a medical question or co-authoring a screenplay, LLMs today feel remarkably fluent. But this fluency isn’t innate: it’s earned through repeated exposure, error correction, and adaptive learning.

At the heart of LLM evolution is the learning loop: a continuous cycle of data ingestion, model training, user interaction, and refinement. Like a student who improves with every lesson, the machine mind gets better each time it goes through the loop.

Let’s break down how this loop powers today’s most advanced AI.

1. Data Collection: The Foundation of Learning

Before a model can generate language, it must first absorb it. The loop begins with massive-scale data collection, including:

- Books, websites, news articles
- Scientific journals and encyclopedias
- Open-source codebases and documentation
- Social media posts, forums, and conversations

This raw input gives the model breadth and depth in topics, dialects, and formats. But raw data is messy, so engineers filter it for quality, remove duplicates, and apply content-safety filters.
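The Python sketch below makes the cleanup step concrete. It shows two common passes: a crude quality heuristic, and exact deduplication by hashing normalized text. The thresholds (`min_words`, `min_letter_ratio`) and function names are hypothetical choices for illustration. Production pipelines typically add near-duplicate detection, language identification, and trained quality or safety classifiers on top of passes like these.

```python
import hashlib
import re


def normalize(text: str) -> str:
    """Lowercase and collapse whitespace so trivial variants hash identically."""
    return re.sub(r"\s+", " ", text.strip().lower())


def passes_quality(text: str, min_words: int = 5, min_letter_ratio: float = 0.6) -> bool:
    """Hypothetical heuristic: keep documents that are long enough and mostly letters."""
    if len(text.split()) < min_words:
        return False
    letters = sum(ch.isalpha() for ch in text)
    return letters / max(len(text), 1) >= min_letter_ratio


def clean_corpus(docs: list[str]) -> list[str]:
    """Drop low-quality documents and exact duplicates (after normalization)."""
    seen: set[str] = set()
    kept: list[str] = []
    for doc in docs:
        if not passes_quality(doc):
            continue
        digest = hashlib.sha256(normalize(doc).encode("utf-8")).hexdigest()
        if digest in seen:
            continue  # an identical document was already kept
        seen.add(digest)
        kept.append(doc)
    return kept


raw = [
    "The Eiffel Tower is located in Paris, France.",
    "the eiffel tower is   located in Paris, France.",  # duplicate up to case/spacing
    "$$$ 404 !!!",  # too short and mostly symbols
    "Photosynthesis converts light energy into chemical energy.",
]
print(clean_corpus(raw))  # keeps the first and last documents
```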

The better the data, the stronger the foundation. In the loop, this stage can repeat with updated datasets, enabling the model to learn from newer trends, facts, and language patterns.

2. Tokenization and Preprocessing: Language to Numbers

LLMs don’t see words; they see tokens and numbers.

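Text is split into chunks called tokens (whole words, pieces of words, or punctuation), and each token is mapped to an integer ID. For example, “ChatGPT is smart” might become a sequence like [2048, 301, 78]. Inside the model, each ID then selects a vector (a numerical representation called an embedding).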
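Here is a toy version of this step in Python. It assumes a tiny hand-written vocabulary and splits each word greedily into the longest known pieces. That is simpler than the byte-pair encoding (BPE) tokenizers many production LLMs use, and the IDs it produces are illustrative; they won't match any real model's.

```python
# Hypothetical toy vocabulary; real models learn vocabularies of tens of thousands of pieces.
VOCAB = ["<unk>", "chat", "gpt", "is", "smart", "er"]
TOKEN_TO_ID = {tok: i for i, tok in enumerate(VOCAB)}


def tokenize(text: str) -> list[str]:
    """Split on whitespace, then greedily take the longest known piece at each position."""
    pieces: list[str] = []
    for word in text.lower().split():
        start = 0
        while start < len(word):
            end = len(word)
            # Shrink the candidate until it matches a vocabulary entry.
            while end > start and word[start:end] not in TOKEN_TO_ID:
                end -= 1
            if end == start:  # no piece matches this character
                pieces.append("<unk>")
                start += 1
            else:
                pieces.append(word[start:end])
                start = end
    return pieces


def encode(text: str) -> list[int]:
    """Map each token to its integer ID."""
    return [TOKEN_TO_ID[piece] for piece in tokenize(text)]


print(tokenize("ChatGPT is smart"))  # ['chat', 'gpt', 'is', 'smart']
print(encode("ChatGPT is smart"))    # [1, 2, 3, 4]
print(encode("smarter"))             # [4, 5]: an unseen word built from known pieces
```

Splitting into sub-word pieces lets the tokenizer represent words it has never seen, such as "smarter" above, without an ever-growing vocabulary.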

This preprocessing step puts language into a form that neural networks, the computational core of LLMs, can process. It also sets the stage for efficient learning: during training, the embedding vectors are adjusted so that words used in similar contexts end up with similar representations in vector space.
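How close two representations are in vector space is commonly measured with cosine similarity. The vectors below are made up for illustration. Real embeddings are learned during training and have hundreds or thousands of dimensions. The sketch only shows how closeness is computed.

```python
import math

# Made-up 3-dimensional vectors, for illustration only.
EMBEDDINGS = {
    "king": [0.8, 0.6, 0.1],
    "queen": [0.7, 0.7, 0.1],
    "apple": [0.1, 0.2, 0.9],
}


def cosine_similarity(u: list[float], v: list[float]) -> float:
    """1.0 means same direction; values near 0 mean roughly perpendicular (unrelated)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


print(f"{cosine_similarity(EMBEDDINGS['king'], EMBEDDINGS['queen']):.2f}")  # 0.99
print(f"{cosine_similarity(EMBEDDINGS['king'], EMBEDDINGS['apple']):.2f}")  # 0.31
```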

3. Pretraining: Building General Intelligence

Once data is tokenized, the model undergoes pretraining: the phase where it learns the structure and logic of language by predicting the next token in a sentence.

For example:

Input: The Eiffel Tower is located in

Model prediction: Paris

Through billions of such predictions, the model learns grammar, facts, tone, and even reasoning patterns.
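The next-token objective can be illustrated with a deliberately tiny stand-in: a bigram model. It predicts the next word from counts of which word followed which in a toy corpus. Real LLMs use a neural network that conditions on the entire preceding context. The training signal is the same idea, though: assign high probability to the token that actually comes next. That is measured by cross-entropy loss, the negative log-probability of the correct token. The corpus and function names here are hypothetical.

```python
import math
from collections import Counter, defaultdict
from typing import Optional

# Hypothetical toy corpus; real pretraining corpora are vastly larger.
corpus = [
    "the eiffel tower is located in paris",
    "the louvre is located in paris",
    "big ben is located in london",
]

# Count how often each word follows each preceding word.
counts: dict[str, Counter] = defaultdict(Counter)
for sentence in corpus:
    words = sentence.split()
    for prev, nxt in zip(words, words[1:]):
        counts[prev][nxt] += 1


def next_token_probs(prev: str) -> dict[str, float]:
    """Estimate P(next word | previous word) from the counts."""
    followers = counts.get(prev, Counter())  # .get avoids adding empty entries
    total = sum(followers.values())
    return {word: c / total for word, c in followers.items()} if total else {}


def predict(prompt: str) -> Optional[str]:
    """Return the most likely next word given the prompt's last word."""
    probs = next_token_probs(prompt.lower().split()[-1])
    return max(probs, key=probs.get) if probs else None


print(predict("The Eiffel Tower is located in"))  # paris
probs = next_token_probs("in")                     # paris: 2/3, london: 1/3
loss = -math.log(probs["paris"])                   # cross-entropy for the true next token
print(round(loss, 3))                              # 0.405
```

Training lowers this loss across billions of positions. A model that put probability 1.0 on every correct token would reach a loss of 0.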

This phase builds general intelligence, but not yet aligned intelligence. The model can generate fluent text, but it doesn’t always know what to say or how to say it helpfully.

4. Fine-Tuning: From Generalist to Specialist

The next phase in the loop is fine-tuning, where the model is further trained on curated datasets or specific tasks to make it more useful and less prone to errors.

Fine-tuning might include:

- Dialogue-based examples
- Legal or medical datasets
- Coding problem-solving tasks
- Creative writing or summarization prompts

Fine-tuning also lets teams get strong results from smaller, faster models by focusing them on specific use cases (e.g., healthcare chatbots or legal AI assistants), rather than relying on one large general-purpose model for everything.
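Supervised fine-tuning data is often stored as prompt/response pairs. The sketch below shows one common way such a pair becomes a training sequence. The prompt and response are concatenated, and a loss mask ensures the model is graded only on predicting the response. Whitespace splitting stands in for a real tokenizer. The `<assistant>` and `<eos>` markers are hypothetical placeholders; each model family defines its own chat template.

```python
from dataclasses import dataclass


@dataclass
class SFTExample:
    prompt: str
    response: str


def build_training_sequence(example: SFTExample) -> tuple[list[str], list[int]]:
    """Concatenate prompt and response; mask prompt tokens out of the loss."""
    prompt_tokens = example.prompt.split() + ["<assistant>"]  # hypothetical marker
    response_tokens = example.response.split() + ["<eos>"]    # hypothetical end marker
    tokens = prompt_tokens + response_tokens
    # 0 = ignored by the loss (the model is not graded on the prompt),
    # 1 = included in the loss (the model learns to produce the response).
    loss_mask = [0] * len(prompt_tokens) + [1] * len(response_tokens)
    return tokens, loss_mask


example = SFTExample(
    prompt="Summarize: The team meeting moved from Thursday to Friday.",
    response="The meeting is now on Friday.",
)
tokens, mask = build_training_sequence(example)
for token, m in zip(tokens, mask):
    print(f"{m} {token}")
```

Masking the prompt is a common design choice rather than a requirement; some setups compute the loss over the full sequence.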

This stage is key to aligning the model with real-world goals.

5. Human Feedback: Teaching the Model What We Want

Even after fine-tuning, LLMs may give unhelpful or inappropriate answers. That’s where human feedback comes in.

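Using methods like Reinforcement Learning from Human Feedback (RLHF), developers guide models with input from real people who rate, rank, or correct outputs. In RLHF, those judgments train a reward model that scores responses, and the LLM is then optimized to produce responses that the reward model scores highly.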

For example:

- If the model answers rudely, a human flags it.
- If it answers vaguely, a better example is provided.
- If it’s helpful, the model is rewarded.

This process teaches the model to prefer honesty, helpfulness, and safety, which are essential qualities for deploying AI in real-world settings.
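The rating step in RLHF is commonly implemented by training a reward model on pairs of responses where a person preferred one over the other. Training minimizes a pairwise (Bradley-Terry style) loss, -log sigmoid(r_chosen - r_rejected). The sketch below fits a linear reward model over three hypothetical hand-made features (politeness, specificity, rudeness) with plain gradient descent. A real reward model is usually itself a large neural network that scores full text. The LLM is then optimized against it, for example with PPO; that step is not shown here.

```python
import math


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def reward(weights: list[float], features: list[float]) -> float:
    """Linear reward: a stand-in for a neural reward model."""
    return sum(w * f for w, f in zip(weights, features))


def preference_loss(weights, chosen, rejected) -> float:
    """-log P(chosen is preferred), with P = sigmoid(reward gap)."""
    return -math.log(sigmoid(reward(weights, chosen) - reward(weights, rejected)))


def train_reward_model(pairs, dims: int, lr: float = 0.5, epochs: int = 200) -> list[float]:
    weights = [0.0] * dims
    for _ in range(epochs):
        for chosen, rejected in pairs:
            margin = reward(weights, chosen) - reward(weights, rejected)
            # Gradient of -log sigmoid(margin) w.r.t. the weights is
            # -(1 - sigmoid(margin)) * (chosen - rejected); step against it.
            scale = 1.0 - sigmoid(margin)
            for i in range(dims):
                weights[i] += lr * scale * (chosen[i] - rejected[i])
    return weights


# Hypothetical features per response: [politeness, specificity, rudeness].
pairs = [
    ([1.0, 1.0, 0.0], [0.0, 1.0, 1.0]),  # polite answer preferred over a rude one
    ([1.0, 1.0, 0.0], [1.0, 0.0, 0.0]),  # specific answer preferred over a vague one
]
weights = train_reward_model(pairs, dims=3)
print([round(w, 2) for w in weights])  # the rudeness weight ends up negative
```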

6. Evaluation and Testing: Closing the Loop

Once feedback is incorporated, models are retested:

- Do they hallucinate fewer facts?
- Are responses more aligned with user intent?
- Is reasoning more accurate and consistent?

Evaluations include automatic benchmarks, human studies, and stress tests (like adversarial prompts or ethical dilemmas).
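Automatic benchmarks often boil down to comparing model answers with reference answers. Here is a minimal exact-match scorer in Python. `toy_model` is a hypothetical stand-in (a lookup table) for a real model call. The normalization rules are an assumption, since benchmarks differ in how strictly they compare answers. The scorer returns the failures alongside the score because the misses are what developers study next.

```python
from typing import Callable


def normalize(answer: str) -> str:
    """Lowercase, trim, drop a trailing period, and collapse whitespace."""
    return " ".join(answer.lower().strip().rstrip(".").split())


def exact_match(model: Callable[[str], str], benchmark: list[tuple[str, str]]):
    """Return (accuracy, failures); each failure is (question, got, expected)."""
    failures = []
    for question, expected in benchmark:
        got = model(question)
        if normalize(got) != normalize(expected):
            failures.append((question, got, expected))
    accuracy = 1 - len(failures) / len(benchmark)
    return accuracy, failures


# Hypothetical stand-in for a model: canned answers keyed by question.
CANNED = {
    "What is the capital of France?": "Paris.",
    "What is 2 + 2?": "4",
    "Who wrote Hamlet?": "Christopher Marlowe",
}


def toy_model(question: str) -> str:
    return CANNED.get(question, "I don't know")


benchmark = [
    ("What is the capital of France?", "paris"),
    ("What is 2 + 2?", "4"),
    ("Who wrote Hamlet?", "William Shakespeare"),
]
accuracy, failures = exact_match(toy_model, benchmark)
print(f"accuracy = {accuracy:.2f}")  # accuracy = 0.67
print(failures)  # the Hamlet question, flagged for review
```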

This continuous testing closes the loop, providing insights that send developers back to the drawing board with better data, updated fine-tuning goals, or new alignment strategies.

The result: a smarter, safer, more capable model.

7. Deployment and Real-World Learning

After development, models are released into products: chatbots, copilots, research assistants, and creative tools. But the learning loop doesn’t stop at deployment.

Real-world usage provides:

- Edge cases the model hasn’t seen
- Feedback from diverse users
- Signals of failure or unexpected behavior

Some organizations implement online learning or feedback-driven retraining pipelines, using real interactions to improve the model over time, much like how humans grow through conversation.
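A feedback pipeline can start as simply as sorting logged interactions by user rating. The sketch below assumes a hypothetical log format with a thumbs-up/thumbs-down `rating` field. Poorly rated exchanges go to a human-review queue, where people might write better responses. Well-rated ones become candidate fine-tuning examples. It also assumes batch retraining on curated data rather than live weight updates.

```python
from dataclasses import dataclass


@dataclass
class Interaction:
    prompt: str
    response: str
    rating: int  # hypothetical scheme: +1 thumbs up, -1 thumbs down, 0 unrated


def triage(logs: list[Interaction]) -> dict[str, list[Interaction]]:
    """Split logged interactions into a review queue and fine-tuning candidates."""
    buckets: dict[str, list[Interaction]] = {"review": [], "finetune_candidates": []}
    seen: set[tuple[str, str]] = set()
    for item in logs:
        key = (item.prompt, item.response)
        if key in seen:
            continue  # skip repeated identical exchanges
        seen.add(key)
        if item.rating < 0:
            buckets["review"].append(item)
        elif item.rating > 0:
            buckets["finetune_candidates"].append(item)
        # Unrated interactions are left out of both buckets.
    return buckets


logs = [
    Interaction("Reset my password?", "I can't help with that.", -1),
    Interaction("Reset my password?", "I can't help with that.", -1),
    Interaction("Summarize this email", "The sender asks to move the call to 3 pm.", 1),
    Interaction("Hi", "Hello! How can I help?", 0),
]
buckets = triage(logs)
print(len(buckets["review"]), len(buckets["finetune_candidates"]))  # 1 1
```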

8. Why the Learning Loop Matters

The power of LLMs isn’t in any one stage; it’s in the iteration. Like a sculptor refining their work with each pass, AI developers shape these models by:

- Adding better data
- Adjusting model weights
- Teaching with feedback
- Re-evaluating and improving
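The four activities above can be strung together in a toy loop. In this sketch, "training" is just counting word pairs, a stand-in for adjusting weights. "Feedback" is a hypothetical list of prompts paired with the answers users expected. Each round turns the misses into new training text and then re-evaluates. Every name here is illustrative; the code shows the shape of the cycle, not how any production system is built.

```python
from collections import Counter
from typing import Optional


def train(corpus: list[str]) -> dict[str, Counter]:
    """Stand-in for adjusting weights: count which word follows which."""
    model: dict[str, Counter] = {}
    for sentence in corpus:
        words = sentence.split()
        for prev, nxt in zip(words, words[1:]):
            model.setdefault(prev, Counter())[nxt] += 1
    return model


def predict(model: dict[str, Counter], prompt: str) -> Optional[str]:
    followers = model.get(prompt.split()[-1])
    return followers.most_common(1)[0][0] if followers else None


def evaluate(model, cases):
    """Re-evaluate: return the fraction answered correctly plus the misses."""
    misses = [(p, a) for p, a in cases if predict(model, p) != a]
    return 1 - len(misses) / len(cases), misses


def learning_loop(corpus, feedback_cases, max_rounds=5):
    scores = []
    for _ in range(max_rounds):
        model = train(corpus)                            # adjust "weights"
        score, misses = evaluate(model, feedback_cases)  # re-evaluate
        scores.append(score)
        if not misses:
            break
        # Teach with feedback: add each corrected answer as new data.
        corpus = corpus + [f"{prompt} {answer}" for prompt, answer in misses]
    return scores


corpus = ["the eiffel tower is in paris"]  # initial data
feedback_cases = [                          # hypothetical user feedback
    ("the eiffel tower is in", "paris"),
    ("photosynthesis needs", "sunlight"),
]
print(learning_loop(corpus, feedback_cases))  # [0.5, 1.0]
```

Here the same cases both drive the corrections and measure the score, so this toy overstates progress. Real pipelines keep separate held-out evaluations for exactly that reason.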

The loop is how we move from raw data to refined dialogue, and from statistical prediction to something that feels like understanding.

Conclusion: Intelligence is Iterative

The machine mind doesn’t awaken; it evolves. Large Language Models are the result of countless cycles of training, correction, and refinement. Each loop makes the model smarter, safer, and more aligned with human intent.

As LLMs become embedded in everything from customer support to education and design, their development must remain dynamic. After all, true intelligence, whether human or artificial, isn’t fixed. It’s learned, tested, and constantly refined.

The learning loop is how machines learn to speak our language, and how we ensure they keep learning to speak it better.